In the fast-evolving world of software development, 2025 is shaping up to be the year where serverless architectures and edge computing take center stage. Traditional monolithic deployments often struggle to keep pace with global user bases that demand low latency, high availability, and cost-effectiveness. By distributing compute and storage closer to end users, organizations can minimize bottlenecks, scale seamlessly, and improve reliability. This comprehensive guide walks you through serverless principles, edge computing models, best practices, and real-world examples to help you architect next-generation applications that stand the test of time.

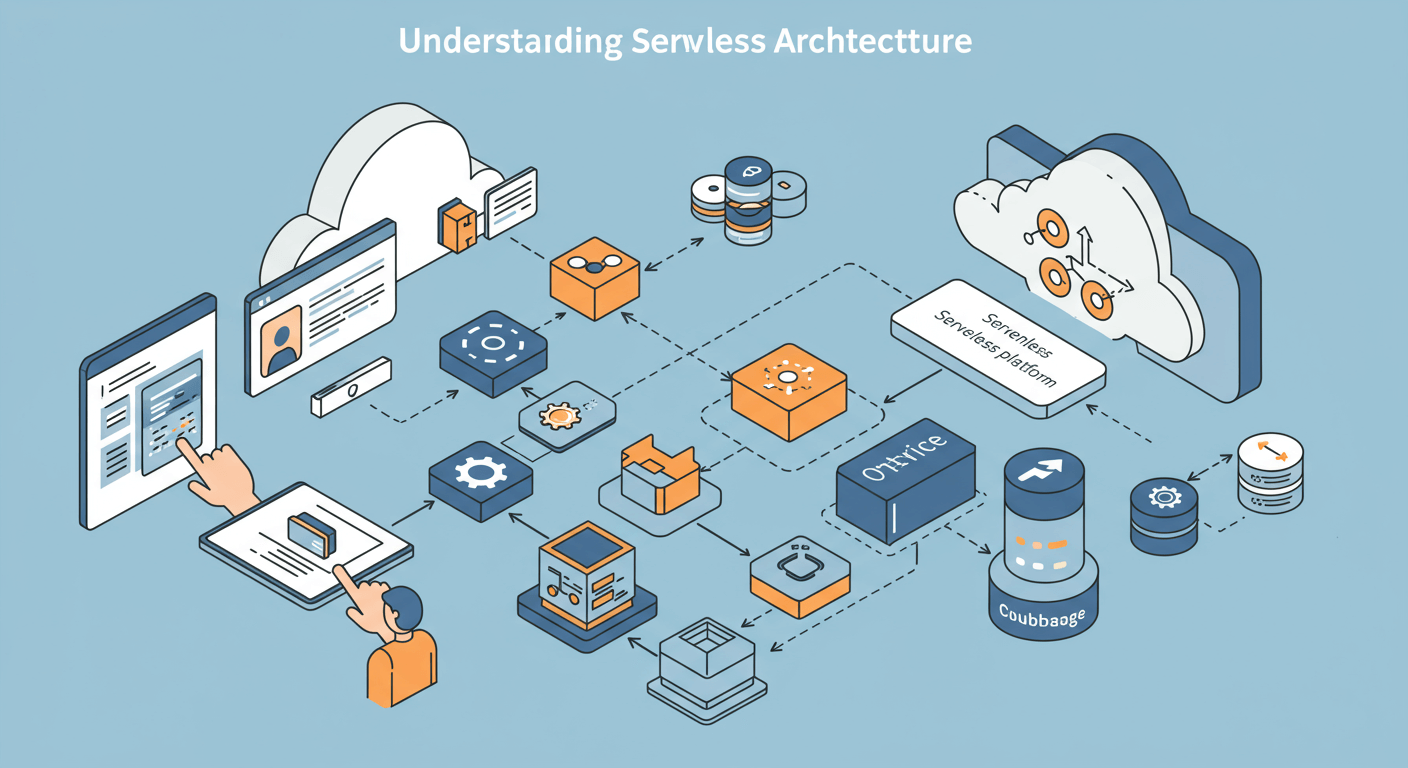

Understanding Serverless Architecture

Serverless computing is like having an elastic, self-managing infrastructure team at your fingertips. It abstracts away server provisioning, patching, and scaling, letting developers focus on what they do best: writing code. With services like AWS Lambda or Azure Functions, your code scales automatically with incoming traffic, so there is no more frantic provisioning or paying for idle servers. You deploy individual functions that spin up on demand. You pay per invocation plus execution time, and that time is metered in fine-grained increments (AWS Lambda, for example, bills duration per millisecond). This pay-as-you-go model isn't just cost-effective; it's a game-changer for agility, removing most up-front capacity planning and accelerating release cycles. And in 2025, serverless has truly grown up. It offers features like provisioned concurrency and stateful workflows (for example, AWS Step Functions and Azure Durable Functions) that can support even mission-critical apps.

But here's the key: this flexibility demands a shift in how we ensure quality, which is why mastering software testing becomes non-negotiable. With a function-based architecture, testing strategies must evolve toward isolated unit tests, event-driven validation, and deeper observability integration. That way, these nimble, decentralized systems don't just scale; they also perform reliably and securely under real-world conditions.
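
To make the testing point concrete, here is a minimal sketch of a function written so it can be unit-tested in isolation. It assumes an AWS Lambda-style Python signature `handler(event, context)` and an API Gateway proxy-style event whose `body` is a JSON string. The `make_handler` factory, the injected `save_order` dependency, and the order fields (`sku`, `quantity`) are hypothetical, chosen only for illustration.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical dependency type: production code might write to a database,
# while tests pass in an in-memory fake.
SaveOrder = Callable[[Dict[str, Any]], str]


def _response(status: int, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Build an API Gateway proxy-style response."""
    return {"statusCode": status, "body": json.dumps(payload)}


def make_handler(save_order: SaveOrder) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Return a Lambda-style handler with its dependency injected."""

    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        try:
            order = json.loads(event.get("body") or "{}")
        except json.JSONDecodeError:
            return _response(400, {"error": "body is not valid JSON"})
        if not isinstance(order, dict):
            return _response(400, {"error": "body must be a JSON object"})

        # Validate the event before calling any downstream service.
        quantity = order.get("quantity")
        if not order.get("sku") or not isinstance(quantity, int) or quantity <= 0:
            return _response(422, {"error": "sku and a positive integer quantity are required"})

        return _response(201, {"orderId": save_order(order)})

    return handler


# Isolated unit test: a synthetic event and a fake store, no cloud resources.
def test_rejects_invalid_orders_before_saving() -> None:
    saved = []

    def fake_save(order: Dict[str, Any]) -> str:
        saved.append(order)
        return f"order-{len(saved)}"

    handler = make_handler(fake_save)
    created = handler({"body": json.dumps({"sku": "ABC", "quantity": 2})}, None)
    assert created["statusCode"] == 201
    assert json.loads(created["body"]) == {"orderId": "order-1"}

    rejected = handler({"body": json.dumps({"sku": "ABC", "quantity": 0})}, None)
    assert rejected["statusCode"] == 422
    assert len(saved) == 1  # the invalid event never reached the store


test_rejects_invalid_orders_before_saving()
```

Injecting the dependency is the design choice that makes this testable: the handler never imports a cloud SDK directly, so a test can exercise validation and responses with plain dictionaries. With pytest, drop the final call and let the runner discover the test.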

The Rise of Edge Computing

Edge computing pushes compute resources closer to where data is generated: onto base stations, routers, IoT devices, or dedicated edge data centers. Processing data at the edge reduces latency and bandwidth usage, and it improves user experiences for applications like real-time analytics, AR/VR, and autonomous systems. Leading cloud providers now offer integrated edge services, such as AWS IoT Greengrass, Azure Edge Zones, and Google Distributed Cloud Edge, that let you deploy functions and containers to edge locations. Through 2024, advances in micro data centers and 5G connectivity accelerated edge adoption, unlocking new possibilities for ultra-low-latency applications.
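
The bandwidth argument can be sketched in a few lines. In this example, an edge node collapses a window of raw sensor readings into one compact summary record and forwards only that summary to the cloud. The reading values, the alert threshold, and the `summarize_window` name are hypothetical. Real deployments (for example, a Greengrass component) would add buffering, batching, and retry logic around this core.

```python
import json
from statistics import mean
from typing import Dict, List, Union


def summarize_window(device_id: str, readings: List[float],
                     alert_threshold: float) -> Dict[str, Union[str, int, float]]:
    """Collapse one window of raw sensor readings into a single compact record."""
    if not readings:
        raise ValueError("window must contain at least one reading")
    return {
        "device": device_id,
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 3),
        # Count out-of-range readings so the cloud can decide whether to pull raw data.
        "alert_count": sum(1 for r in readings if r > alert_threshold),
    }


# 100 raw readings buffered at the edge (hypothetical values).
raw = [21.0, 21.5, 22.0, 35.2, 21.8] * 20
summary = summarize_window("sensor-7", raw, alert_threshold=30.0)

raw_bytes = len(json.dumps(raw).encode())
summary_bytes = len(json.dumps(summary).encode())
print(summary)
print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes")
```

The trade-off is fidelity. The cloud sees aggregates rather than every sample, which is why the summary keeps an alert count that can trigger a request for the raw window when something looks wrong.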

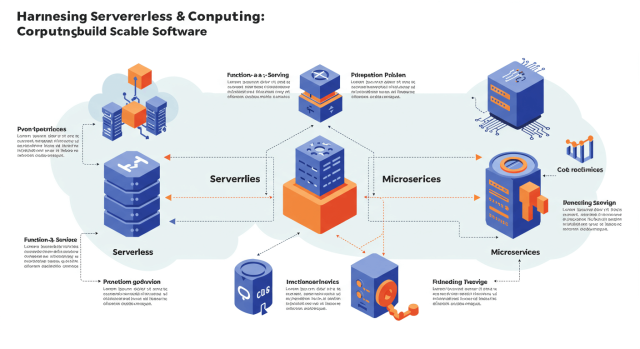

Combining Serverless and Edge for Maximum Impact

When you marry serverless and edge computing, you get the best of both worlds: elastic scale in the cloud paired with fast responses at the edge. A common pattern is to run your core business logic and heavy processing in a serverless cloud region while deploying lightweight filtering or pre-processing functions at the edge. For example, an e-commerce platform can handle cart operations and payment flows with Lambda functions in a central region, while edge functions on Cloudflare Workers or AWS Lambda@Edge manage geolocation routing, A/B tests, or image optimization. This hybrid approach can improve performance, reduce costs, and keep user experiences consistent across regions.
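
Here is a sketch of the kind of lightweight logic that runs at the edge in this pattern. It shows deterministic A/B bucketing, so a user sees the same variant on every request without a database lookup, plus country-based routing to a regional origin. It is plain Python for readability; actual Cloudflare Workers or Lambda@Edge code would use those platforms' own request objects and runtimes. The origin hostnames, the header name, and the experiment name are hypothetical.

```python
import hashlib
from typing import Dict, Optional

# Hypothetical regional origins; substitute your own endpoints.
REGIONAL_ORIGINS: Dict[str, str] = {
    "US": "https://us.origin.example.com",
    "DE": "https://eu.origin.example.com",
    "FR": "https://eu.origin.example.com",
    "JP": "https://ap.origin.example.com",
}
DEFAULT_ORIGIN = "https://us.origin.example.com"


def ab_bucket(user_id: str, experiment: str, variant_b_share: float = 0.5) -> str:
    """Deterministically assign a user to variant "A" or "B".

    Hashing the experiment name with the user ID yields a stable, roughly
    uniform number in [0, 1), so every edge node makes the same choice
    without shared state or a database lookup.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    position = (int.from_bytes(digest[:8], "big") >> 11) / 2**53  # top 53 bits -> [0, 1)
    return "B" if position < variant_b_share else "A"


def route(country: Optional[str], user_id: str) -> Dict[str, str]:
    """Choose an origin by ISO country code and tag the request with its variant."""
    origin = REGIONAL_ORIGINS.get((country or "").upper(), DEFAULT_ORIGIN)
    return {"origin": origin, "x-experiment-variant": ab_bucket(user_id, "checkout-v2")}


print(route("de", "user-123"))
print(route(None, "user-456"))  # unknown location falls back to the default origin
```

Including the experiment name in the hash keeps bucket assignments independent across experiments, so the same users are not always in variant B.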

Architecting for Scalability

Scalability in serverless and edge environments hinges on event-driven design. Break your application into small, independent functions triggered by HTTP requests, message queues, or IoT telemetry. Embrace asynchronous workflows with managed services like AWS Step Functions or Azure Durable Functions to coordinate complex business processes. At the edge, run functions on CDN request and response events to handle high-throughput scenarios such as video streaming or real-time notifications. Cache content close to users with CDNs such as Amazon CloudFront or Azure CDN, and use globally replicated databases such as DynamoDB Global Tables or Azure Cosmos DB to keep reads and writes low-latency worldwide.
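
One concrete form of the queue-triggered functions described above is an AWS Lambda-style consumer for Amazon SQS batches. It processes each message independently and reports only the failed ones back to the queue. The sketch assumes the documented SQS event shape (`Records`, each with `messageId` and `body`) and the `batchItemFailures` response, which takes effect only when `ReportBatchItemFailures` is enabled on the event source mapping. `process_order_event` is a hypothetical placeholder for your business logic.

```python
import json
from typing import Any, Dict, List


def process_order_event(message: Dict[str, Any]) -> None:
    """Hypothetical business logic for a single queued order event."""
    if "orderId" not in message:
        raise ValueError("missing orderId")
    # ... update inventory, publish downstream events, etc.


def handle_queue_batch(event: Dict[str, Any], context: Any) -> Dict[str, List[Dict[str, str]]]:
    """SQS-triggered handler that processes each record independently.

    Returning failed message IDs in "batchItemFailures" lets the queue retry
    only those messages; this assumes ReportBatchItemFailures is enabled on
    the event source mapping.
    """
    failures: List[Dict[str, str]] = []
    for record in event.get("Records", []):
        try:
            process_order_event(json.loads(record["body"]))
        except Exception:
            # One bad message should not force the whole batch to be retried.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


sample_event = {
    "Records": [
        {"messageId": "m1", "eventSource": "aws:sqs", "body": json.dumps({"orderId": "o-1"})},
        {"messageId": "m2", "eventSource": "aws:sqs", "body": "{}"},
    ]
}
print(handle_queue_batch(sample_event, None))  # → {'batchItemFailures': [{'itemIdentifier': 'm2'}]}
```

Keeping each function this small, with one trigger and one job, is what lets the platform scale consumers independently of the HTTP-facing functions that enqueue the work.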

Ensuring Resilience and Fault Tolerance

In distributed architectures, fault tolerance is critical. Implement automated retries with exponential backoff, plus circuit breakers, in your functions to handle transient errors gracefully. Use multiple edge locations or availability zones to avoid single points of failure. For stateful workflows, consider event sourcing and durable queues (e.g., Amazon SQS, Azure Service Bus) to decouple producers and consumers. Monitor function cold starts, error rates, and latency with built-in observability tools like AWS X-Ray or Google Cloud Trace. Finally, review each provider's SLAs and regional controls, which allow fine-grained configuration of failover behavior across serverless and edge resources.
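
The retry and circuit-breaker ideas combine as follows in this minimal sketch. It uses exponential backoff with "full jitter" (a random delay between zero and a doubling ceiling) and a simple consecutive-failure breaker with a half-open trial call. The `TransientError` class, the thresholds, and the delays are assumptions for illustration. The breaker is not thread-safe, and production code would typically use a maintained resilience library or the retry settings built into your provider's SDK.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Raised by a dependency for errors worth retrying (timeouts, throttling)."""


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls fail fast."""


def retry_with_backoff(fn: Callable[[], T], max_attempts: int = 4, base_delay: float = 0.1,
                       max_delay: float = 2.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry fn on TransientError using exponential backoff with full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Ceiling doubles per attempt (0.1 s, 0.2 s, 0.4 s, ...) up to max_delay;
            # a random delay below it spreads out retries from concurrent functions.
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise RuntimeError("unreachable")


class CircuitBreaker:
    """Opens after failure_threshold consecutive failures, fails fast until
    reset_timeout seconds pass, then lets a trial call through (half-open)."""

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            raise CircuitOpenError("circuit open; failing fast")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # A failed trial call, or reaching the threshold, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None
        return result


attempts = {"n": 0}


def flaky_inventory_call() -> str:
    """Hypothetical dependency that is throttled twice, then succeeds."""
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TransientError("throttled")
    return "in stock"


breaker = CircuitBreaker(failure_threshold=3, reset_timeout=10.0)
print(breaker.call(lambda: retry_with_backoff(flaky_inventory_call)))  # → in stock
```

Wrapping the whole retry loop in the breaker means one exhausted retry sequence counts as a single failure. Once the breaker opens, callers fail fast instead of piling more load onto a struggling dependency.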

Security and Compliance at the Edge

Security in a hybrid serverless-edge setup requires a zero-trust mindset. Authenticate every request at the edge, for example by validating JSON Web Tokens (JWTs) issued through an OAuth 2.0 flow, and enforce authorization with API gateways. Encrypt data in transit with TLS and at rest using provider-managed key stores. Use edge-based Web Application Firewalls (WAFs) and DDoS protection to filter malicious traffic before it reaches your core infrastructure. For regulated industries, ensure compliance by configuring data residency rules at edge PoPs and in central regions. Tools like AWS IAM, Microsoft Entra ID (formerly Azure AD), and Google Cloud IAM can centralize identity and access policies across serverless and edge endpoints for streamlined governance.

Optimizing Performance and Cost

Performance tuning in serverless and edge contexts revolves around cold-start mitigation and resource allocation. Provisioned concurrency or pre-warmed edge functions can reduce latency spikes. Match memory and CPU allocations to your workload. On platforms such as AWS Lambda, CPU is allocated in proportion to configured memory, so more memory raises the per-millisecond price but can shorten execution enough to lower the total cost. Use granular monitoring and cost analytics tools to identify expensive hot spots. At the edge, use content compression and intelligent caching strategies to minimize data transfer. FinOps practices now extend to serverless and edge as well, with native cost anomaly detection and budget alerts built into cloud consoles.
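
The memory/cost trade-off is easiest to see with a little arithmetic. This sketch models Lambda-style pricing as a per-request fee plus a charge per GB-second, where GB-seconds are configured memory multiplied by duration. The default rates are illustrative placeholders, because actual prices vary by region and architecture and change over time. The two durations are hypothetical measurements of the same workload, not benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    # Illustrative placeholder rates; check your provider's current price list.
    per_million_requests: float = 0.20
    per_gb_second: float = 0.0000166667


def monthly_cost(invocations: int, memory_mb: int, avg_duration_ms: float,
                 pricing: Pricing = Pricing()) -> float:
    """Estimate monthly cost as request fees plus compute (GB-second) fees."""
    gb_seconds = invocations * (memory_mb / 1024) * (avg_duration_ms / 1000)
    request_fees = invocations / 1_000_000 * pricing.per_million_requests
    return request_fees + gb_seconds * pricing.per_gb_second


# Hypothetical measurements: doubling memory (and with it the CPU share)
# cut the average duration from 400 ms to 180 ms.
small = monthly_cost(10_000_000, 512, 400)
large = monthly_cost(10_000_000, 1024, 180)
print(f"512 MB: ${small:,.2f}/month, 1024 MB: ${large:,.2f}/month")
# → 512 MB: $35.33/month, 1024 MB: $32.00/month
```

The larger configuration wins here only because duration fell by more than half. If doubling memory had cut duration by less than 50%, the compute charge would have risen, which is why measuring at several memory sizes beats guessing.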

Developer Tooling and CI/CD Best Practices

Modern development workflows embrace infrastructure as code (IaC) with Terraform, AWS CloudFormation, or Azure Bicep to define serverless and edge resources declaratively. Leverage CI/CD pipelines (GitHub Actions, GitLab CI, Azure Pipelines) for automated testing, security scans, and blue/green deployments. Use emulators and local runtimes (e.g., AWS SAM CLI, Azure Functions Core Tools) to validate functions before pushing them to cloud or edge environments. Adopt GitOps practices to detect configuration drift and roll back safely. In 2025, tools such as the Serverless Framework, or Azure Functions hosted on Kubernetes with KEDA, can help unify deployments across cloud regions and on-premises edge clusters.
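
Blue/green and canary releases for functions usually shift traffic in steps and roll back if error rates rise. AWS Lambda, for instance, supports weighted aliases that split traffic between two versions. The sketch below models only the promotion decision. The step weights, the 1% error budget, and the `get_error_rate` callback are hypothetical stand-ins for your deployment tool and metrics source.

```python
from typing import Callable, List, Sequence, Tuple


def canary_rollout(
    get_error_rate: Callable[[float], float],
    steps: Sequence[float] = (0.05, 0.25, 0.5, 1.0),
    max_error_rate: float = 0.01,
) -> Tuple[str, List[float]]:
    """Shift traffic to the new ("green") version step by step.

    get_error_rate(weight) is a hypothetical hook that routes `weight` of the
    traffic to the new version, waits for a bake period, and returns the
    observed error rate. Any breach of max_error_rate sends all traffic back
    to the old ("blue") version.
    """
    applied: List[float] = []
    for weight in steps:
        applied.append(weight)
        if get_error_rate(weight) > max_error_rate:
            applied.append(0.0)  # roll back: 0% to green, 100% to blue
            return "rolled_back", applied
    return "promoted", applied


# Simulated metrics: the new version misbehaves once it takes half the traffic.
status, history = canary_rollout(lambda w: 0.002 if w < 0.5 else 0.03)
print(status, history)  # → rolled_back [0.05, 0.25, 0.5, 0.0]
```

Keeping the decision logic as a pure function makes it testable in CI like any other code, while the pipeline supplies the real traffic-shifting and metrics hooks.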

Real-World Case Study: Smart Retail Application

Consider a global retail company that implemented a hybrid serverless-edge solution to power its online storefront and in-store experiences. Edge functions handle localized pricing, inventory checks, and personalized promotions to deliver sub-50ms responses for shoppers across continents. Core order processing runs as serverless functions in a primary region, integrating seamlessly with backend ERP and payment systems. The architecture reduced infrastructure costs by 40% compared to reserved instances, improved site performance by 60%, and simplified compliance by enforcing data residency at edge PoPs. Advanced telemetry from both cloud and edge provided unified observability, enabling rapid troubleshooting and capacity planning.

Challenges and Mitigation Strategies

While compelling, hybrid serverless-edge architectures introduce complexity. Observability sprawl, distributed security policies, and vendor lock-in concerns can slow adoption. To mitigate these challenges, centralize logging and metrics in a purpose-built monitoring platform (e.g., Datadog, New Relic) that ingests data from both edge and cloud. Standardize IAM roles and network policies across environments. Adopt open-source frameworks like OpenFaaS or Knative for portability. Conduct regular chaos engineering experiments to validate failover and resilience under real-world conditions. Build a cross-functional team skilled in cloud and network infrastructure to bridge the gap between core and edge operations.
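
Centralized observability works best when edge and cloud functions emit logs in the same shape. This minimal sketch uses Python's standard `logging` module. A formatter writes one JSON object per line, carrying a shared `tier` and `trace_id`, so a monitoring platform can join the edge and core records for a single request. The field names, service name, and trace ID value are an assumed convention for illustration, not any vendor's schema.

```python
import json
import logging
import time


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line with a shared schema."""

    def __init__(self, service: str, tier: str) -> None:
        super().__init__()
        self.service = service
        self.tier = tier  # e.g. "edge" or "cloud"

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime(record.created)),
            "level": record.levelname,
            "service": self.service,
            "tier": self.tier,
            # The same trace ID flows from edge to core so records can be joined.
            "trace_id": getattr(record, "trace_id", None),
            "message": record.getMessage(),
        }
        return json.dumps(entry)


logger = logging.getLogger("storefront.edge")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter(service="storefront", tier="edge"))
logger.addHandler(handler)
logger.setLevel(logging.INFO)

# The `extra` mapping attaches trace_id as an attribute on the log record.
logger.info("price lookup served from cache", extra={"trace_id": "4bf92f3577b34da6"})
```

Agreeing on a small shared schema is cheaper than reconciling different log formats later in the monitoring platform, and it makes cross-tier queries like "all records for this trace" trivial.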

Future Trends: Beyond 2025

Looking ahead, the serverless and edge landscape will continue to evolve with distributed AI, confidential computing, and 6G connectivity. Expect to see model-serving functions at the edge, enabling real-time inference for computer vision, natural language processing, and predictive analytics. Confidential computing enclaves at micro data centers will offer hardware-backed security for sensitive data processing. 6G and advanced mesh networks will extend edge reach into remote and industrial locations. As hybrid architectures become mainstream, standardized service meshes and federated control planes will simplify orchestration and policy enforcement across global deployments.

Conclusion

Serverless and edge computing together represent a paradigm shift in how we build, deploy, and scale software. By decoupling functions from infrastructure and distributing workloads closer to users, you can unlock substantial gains in performance, cost efficiency, and resilience. In 2025, with mature platforms, robust security controls, and comprehensive tooling, there's never been a better time to embrace hybrid architectures. Start small with proof-of-concept functions at the edge, integrate them with your existing serverless workflows, and iterate rapidly. The future of software is distributed. Are you ready to harness its full potential?